Harsha Kokel

Types of Neuro-Symbolic Systems

I attended the AAAI 2020 conference in New York, and one of the most influential talks there (for me, of course!) was Prof. Henry Kautz's address on The Third AI Summer. In that talk, he proposed a taxonomy of systems that combine neural and symbolic approaches. This article is my attempt to summarize that taxonomy.

Prof. Henry Kautz, in his Robert S. Engelmore Memorial Lecture at AAAI 2020^, reviewed the past and present of AI and argued that the future of AI lies in combining neural and symbolic approaches, alluding to the famous and heated debate between Prof. Gary Marcus and Prof. Yoshua Bengio. To that end, he put forward a taxonomy of Neuro-Symbolic Systems that I aim to elaborate upon.

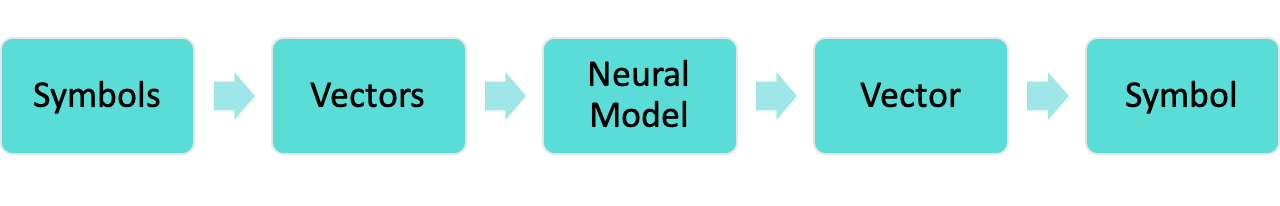

1. symbolic Neuro symbolic

This is the current standard operating procedure of deep learning: all the symbols in the problem are converted to vector embeddings, the vectors are processed by a neural model, and the vector the model produces is then converted back into the required symbol.

Most NLP systems fall under this category, since words are converted to vectors before any neural manipulation takes place. Personally, I would not call this Neuro-Symbolic integration.
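To make the symbol → vector → neural computation → vector → symbol flow concrete, here is a minimal sketch. The words, the 2-d embeddings, and the weights of the single linear layer are all hypothetical hand-picked values standing in for a trained model; only the shape of the pipeline is the point.

```python
import math
from typing import Dict, List

# Hypothetical embedding table: in a real system these vectors are learned.
EMBED: Dict[str, List[float]] = {
    "cat": [1.0, 0.0],
    "dog": [0.9, 0.1],
    "sat": [0.0, 1.0],
    "ran": [0.1, 0.9],
}

# Hypothetical "trained" linear layer: one weight row and bias per output symbol.
LABELS = ["ANIMAL", "ACTION"]
WEIGHTS = [[2.0, -2.0], [-2.0, 2.0]]
BIASES = [0.0, 0.0]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(word: str) -> str:
    x = EMBED[word]  # symbol -> vector
    scores = [sum(w * xi for w, xi in zip(row, x)) + b
              for row, b in zip(WEIGHTS, BIASES)]  # neural computation on vectors
    probs = softmax(scores)
    return LABELS[probs.index(max(probs))]  # vector -> symbol


print([classify(w) for w in ["cat", "dog", "sat", "ran"]])
# → ['ANIMAL', 'ANIMAL', 'ACTION', 'ACTION']
```

Everything between the lookup and the final `argmax` operates purely on vectors, which is why I hesitate to call this an integration of the two paradigms.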

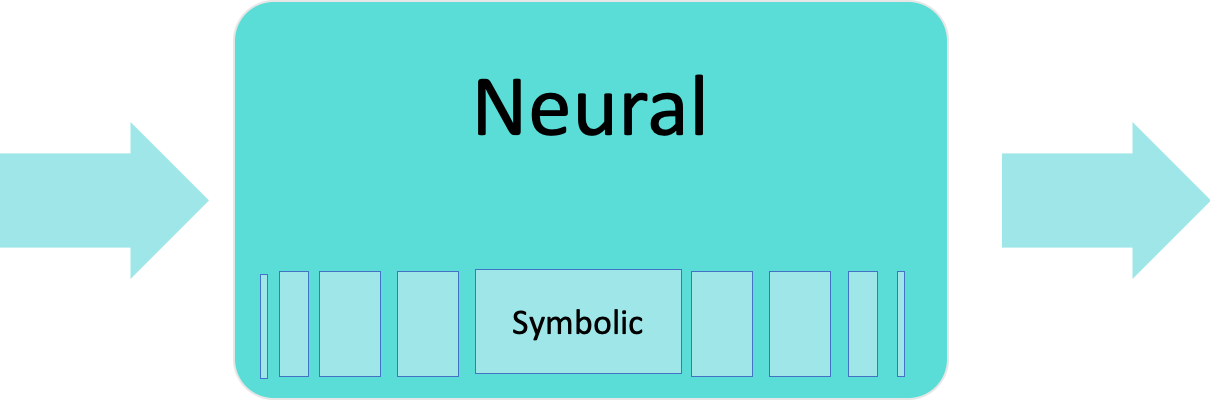

2. Symbolic[Neuro]

This is an overall symbolic solver that uses a neural model internally as a subroutine for one or more functions.

The example provided by Prof. Kautz is AlphaGo, which uses a symbolic Monte Carlo tree search algorithm together with a neural state estimator.
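A minimal sketch of the Symbolic[Neuro] shape, under heavy simplifying assumptions: a toy take-away game (remove 1 or 2 stones; whoever takes the last stone wins) replaces Go, plain depth-limited negamax replaces Monte Carlo tree search, and `neural_value` is a hypothetical one-unit network with hand-picked weights rather than a trained model. The symbolic search owns the game rules and the control flow; the neural function is called only as a subroutine at the search frontier.

```python
import math
from typing import List

# Hypothetical stand-in for a trained value network: a single tanh unit
# with hand-picked weights, scoring a position for the player to move.
WEIGHTS = [0.3, -0.2]
BIAS = 0.1
MOVES = (1, 2)  # a player may remove 1 or 2 stones


def features(pile: int) -> List[float]:
    return [pile / 10.0, float(pile % 2)]


def neural_value(pile: int) -> float:
    z = sum(w * x for w, x in zip(WEIGHTS, features(pile))) + BIAS
    return math.tanh(z)  # estimate in (-1, 1)


def legal_moves(pile: int) -> List[int]:
    return [m for m in MOVES if m <= pile]


def negamax(pile: int, depth: int) -> float:
    """Symbolic search over exact game rules; the value is for the player to move."""
    if pile == 0:
        return -1.0  # the opponent just took the last stone, so this player lost
    if depth == 0:
        return neural_value(pile)  # neural subroutine evaluates the frontier
    return max(-negamax(pile - m, depth - 1) for m in legal_moves(pile))


def best_move(pile: int, depth: int) -> int:
    return max(legal_moves(pile), key=lambda m: -negamax(pile - m, depth - 1))


print(best_move(4, depth=10), best_move(5, depth=10))  # → 1 2
print(best_move(5, depth=1))  # shallow search: the choice rests on neural_value
```

With a deep search the symbolic part solves the toy game exactly and the network is never consulted; the shallower the search, the more the decision depends on the neural estimate, which mirrors the division of labour described above.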

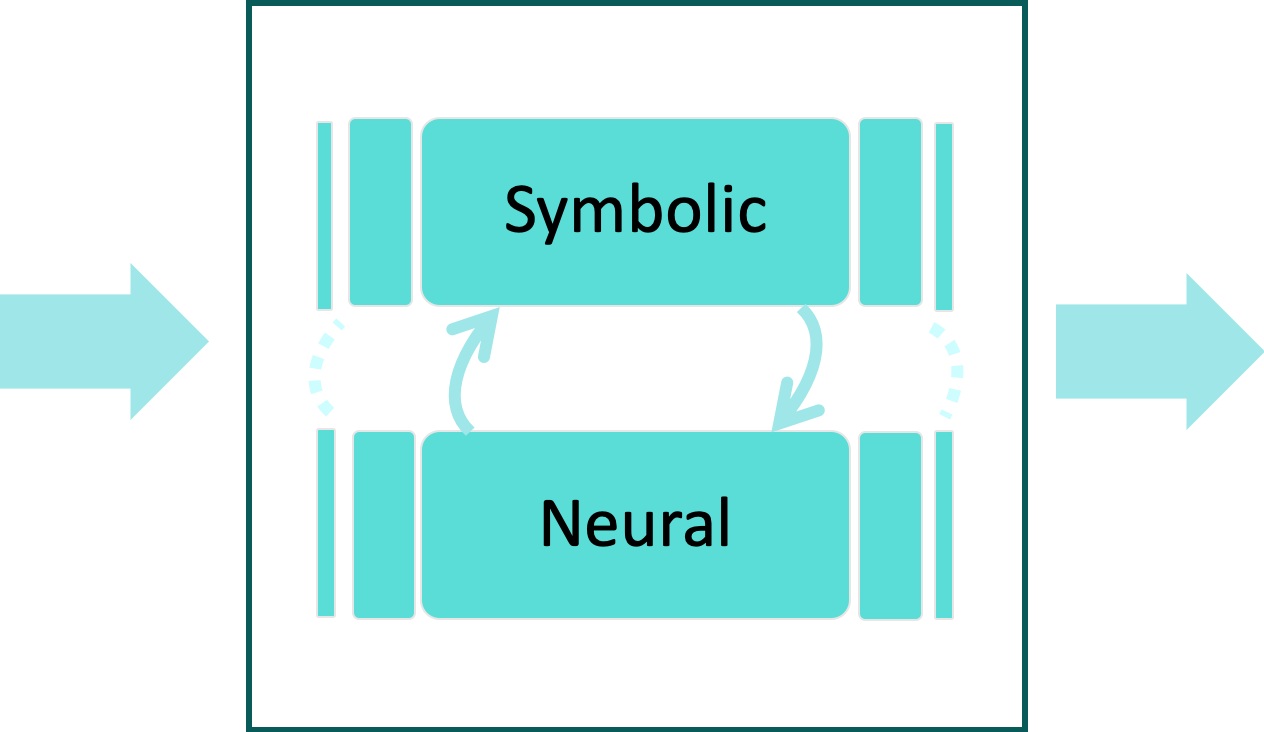

3. Neuro;Symbolic

This is a more refined integration in which the neural and symbolic systems handle different tasks within a larger pipeline. The two systems communicate with each other, either to extract information or to improve individual or collective performance.

The Neuro-Symbolic Concept Learner by Mao et al.^ is presented as an example of this type of integration, since the neural model does object detection and the symbolic reasoner (which is a GNN) does the relational reasoning. I am not quite comfortable categorizing a GNN as a symbolic system, so I would not consider Mao et al. to be Type 3.

I believe Illanes et al. (ICAPS 2020^) and other related work are better candidates for Type 3 Neuro-Symbolic integration. Illanes et al. integrate a symbolic planner with a neural RL agent, and the two systems feed off each other.
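A minimal sketch of the Neuro;Symbolic pattern in the spirit of Illanes et al., assuming a toy key-and-door domain: a symbolic breadth-first planner over hypothetical STRIPS-style operators produces a high-level plan, and each plan step is handed to a low-level policy as a subgoal. The `options` dictionary stands in for RL policies that would be trained per subgoal; here they are hypothetical stubs, and this is not the authors' implementation.

```python
from collections import deque
from typing import Callable, Dict, FrozenSet, List, Optional, Set, Tuple

# Hypothetical STRIPS-style operators: name -> (preconditions, add list, delete list).
Operator = Tuple[FrozenSet[str], FrozenSet[str], FrozenSet[str]]
OPERATORS: Dict[str, Operator] = {
    "get_key": (frozenset({"at_start"}), frozenset({"has_key"}), frozenset()),
    "open_door": (frozenset({"has_key"}), frozenset({"door_open"}), frozenset()),
    "go_to_goal": (frozenset({"door_open"}), frozenset({"at_goal"}), frozenset({"at_start"})),
}


def plan(init: Set[str], goal: Set[str]) -> Optional[List[str]]:
    """Symbolic side: breadth-first search for a shortest operator sequence."""
    start = frozenset(init)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:
            return steps
        for name, (pre, add, delete) in OPERATORS.items():
            if pre <= state:
                nxt = (state - delete) | add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [name]))
    return None


def execute(steps: List[str], options: Dict[str, Callable[[], bool]]) -> List[Tuple[str, bool]]:
    """Neural side: each plan step is a subgoal for a (hypothetical) learned policy."""
    trace = []
    for step in steps:
        ok = options[step]()
        trace.append((step, ok))
        if not ok:
            break  # a real system would feed this failure back, e.g. retrain or replan
    return trace


high_level = plan({"at_start"}, {"at_goal"})
print(high_level)  # → ['get_key', 'open_door', 'go_to_goal']
stub_options = {name: (lambda: True) for name in OPERATORS}
print(execute(high_level, stub_options))
# → [('get_key', True), ('open_door', True), ('go_to_goal', True)]
```

The planner never sees raw observations and the policies never reason about the overall task; the two sides meet only at the plan steps, which is what makes this a pipeline rather than one system nested inside the other.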

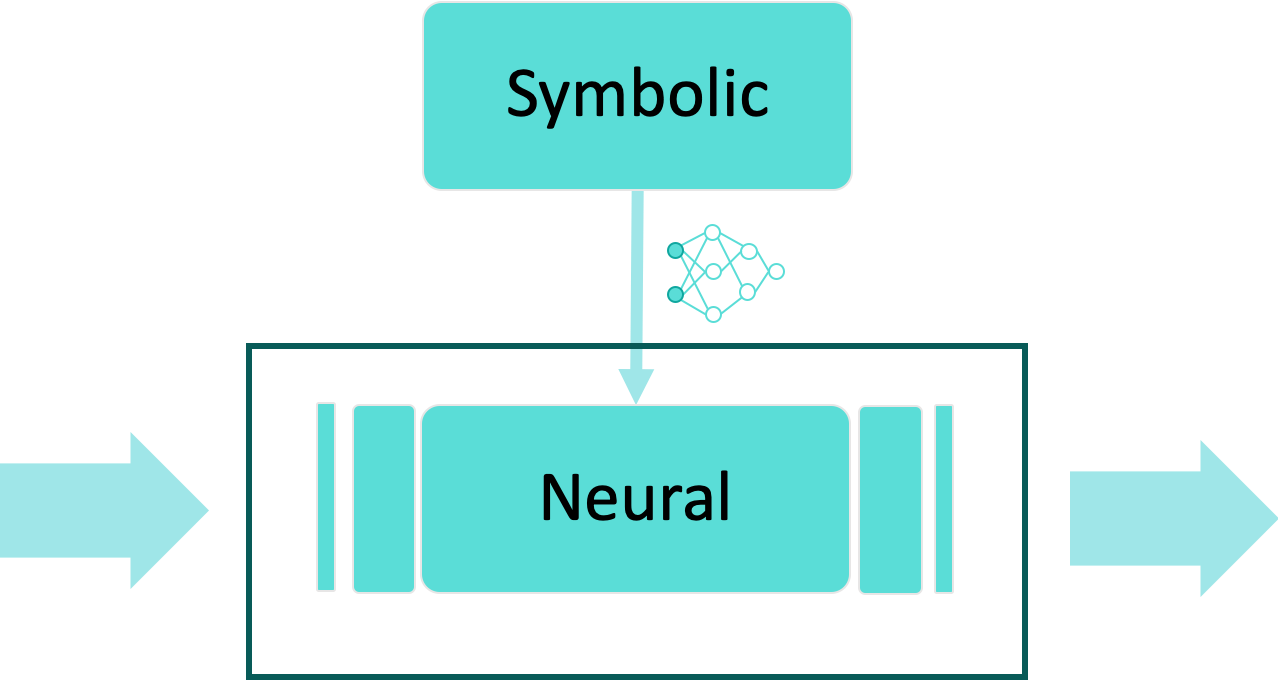

4. Neuro:Symbolic $\rightarrow$ Neuro

This type covers Neuro-Symbolic systems where symbolic knowledge is compiled into the structure of neural models. Both Kautz and Lamb et al. give Lample and Charton 2020 as an example, but I fail to see how Lample and Charton compile symbolic knowledge into the neural model. However, the other examples provided by Lamb et al. are quite easy to follow. Arabshahi et al. compile symbolic expression trees into tree LSTMs. Other works, like Hitzler et al. 2004^ and Garcez et al. 2015^, compile logic programs or if-then rules into a neural network architecture.
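A minimal sketch of compiling if-then rules into a network, loosely in the spirit of the logic-program translations cited above but simplified to threshold units (the cited works use semi-linear, trainable neurons with carefully derived weights, which this sketch does not reproduce). Each rule `head <- b1, ..., bn` becomes a hidden AND unit, each head atom becomes an OR unit over its rules, and feeding outputs back to the inputs lets chained rules fire. The rules and atom names are hypothetical.

```python
from typing import Dict, List, Set, Tuple

Rule = Tuple[str, List[str]]  # (head, body): head holds if every body atom holds
Unit = Tuple[str, Dict[str, float], float]  # (head, input weights, bias)


def step(x: float) -> float:
    return 1.0 if x > 0 else 0.0


def compile_rules(rules: List[Rule], w: float = 1.0) -> List[Unit]:
    """One hidden AND unit per rule; the bias lets it fire only if all body atoms are on."""
    return [(head, {atom: w for atom in body}, -w * (len(body) - 0.5))
            for head, body in rules]


def forward(network: List[Unit], facts: Set[str], iterations: int = 5) -> Set[str]:
    state = {atom: 1.0 for atom in facts}  # input layer: atoms known to be true
    for _ in range(iterations):
        votes: Dict[str, float] = {}
        for head, weights, bias in network:
            h = step(sum(wt * state.get(a, 0.0) for a, wt in weights.items()) + bias)
            votes[head] = votes.get(head, 0.0) + h
        new_state = dict(state)
        for head, total in votes.items():
            if step(total - 0.5):  # OR unit: on if any rule for this head fired
                new_state[head] = 1.0
        state = new_state  # recurrent link: derived atoms become inputs
    return {atom for atom, v in state.items() if v == 1.0}


RULES: List[Rule] = [
    ("wet", ["rain"]),
    ("wet", ["sprinkler"]),
    ("slippery", ["wet", "cold"]),
]
net = compile_rules(RULES)
print(sorted(forward(net, {"sprinkler", "cold"})))
# → ['cold', 'slippery', 'sprinkler', 'wet']
```

The network's structure (which units exist and how they connect) is dictated entirely by the rules; in the trainable versions, the weights initialized this way can then be refined from data.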

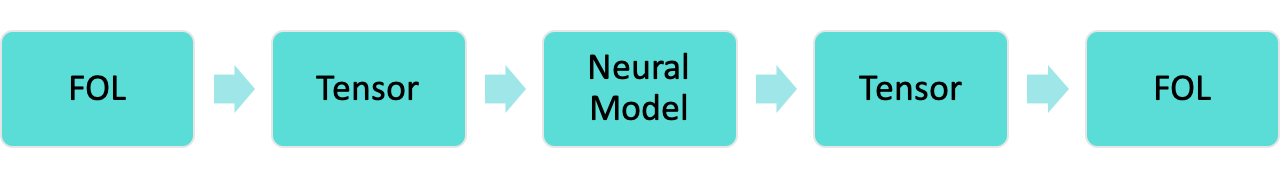

5. NeuroSymbolic

This category was not listed during the AAAI 2020 lecture but was added to Prof. Kautz's slides later^. It covers all approaches where a first-order logic language is tensorized and neural methods are used to reason over this tensorized representation. Research in this category includes Logic Tensor Networks [Serafini et al. 2016^], Tensor Product Representations [Smolensky et al. 2016^], Neural Tensor Networks [Socher et al. 2013^], relational embeddings, etc.

The difference between this type and Type 1 is that in Type 1 only the objects are represented as vector embeddings. Here, the complete first-order language, including objects, relations, clauses/rules, functions, and predicates, is represented by tensor embeddings.
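A minimal sketch of what tensorizing first-order logic can look like, loosely following the Logic Tensor Network idea: constants are grounded as vectors, predicates as differentiable functions into [0, 1], connectives as fuzzy-logic operators, and the universal quantifier as an aggregate over all groundings. The embeddings, predicate weights, and the particular operators (product t-norm, Reichenbach implication, mean aggregation) are assumptions chosen for illustration, not the exact choices of Serafini et al. In a real system, the embeddings and predicate parameters would be trained by gradient ascent to maximize the truth of the knowledge base; that training loop is omitted here.

```python
import math
from itertools import product
from typing import Dict, List

Vec = List[float]

# Hypothetical 2-d groundings of constants (learned in a real system).
CONSTANTS: Dict[str, Vec] = {"anna": [1.0, 0.2], "bob": [0.1, 0.9], "carl": [0.8, 0.7]}


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def dot(u: Vec, v: Vec) -> float:
    return sum(a * b for a, b in zip(u, v))


# Predicates: parametric functions from embeddings to truth degrees in [0, 1].
def smokes(x: Vec) -> float:
    return sigmoid(dot([2.0, -1.0], x))


def friends(x: Vec, y: Vec) -> float:
    return sigmoid(3.0 * dot(x, y) - 1.0)


# Connectives as fuzzy operators.
def f_and(a: float, b: float) -> float:
    return a * b  # product t-norm


def f_implies(a: float, b: float) -> float:
    return 1.0 - a + a * b  # Reichenbach implication


def forall(values: List[float]) -> float:
    return sum(values) / len(values)  # mean aggregator (min is another option)


def rule_truth() -> float:
    """Truth degree of: forall x, y. Friends(x, y) AND Smokes(x) -> Smokes(y)."""
    return forall([f_implies(f_and(friends(x, y), smokes(x)), smokes(y))
                   for x, y in product(CONSTANTS.values(), repeat=2)])


print(round(smokes(CONSTANTS["anna"]), 3), round(rule_truth(), 3))
```

Unlike the Type 1 sketch, here the rule itself is evaluated numerically over embeddings, so its truth degree is a differentiable function of every parameter involved.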

6. Neuro[Symbolic]

Finally, the last type is the transpose of Type 2: an overall neural model performs symbolic reasoning, either by learning the relations between symbols or by paying attention to selected symbols at certain points. This is exactly what Graph Neural Networks (GNNs) do, so I think GNNs are synonymous with Type 6 Neuro-Symbolic integration. Lamb et al. 2020^ make the same argument.
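A minimal sketch of the Neuro[Symbolic] idea as one round of attention-based message passing over a small graph of symbols, loosely in the style of graph attention networks but with no learned projection matrices: each node scores its neighbors by dot product, turns the scores into softmax attention weights, and mixes the weighted neighbor features into its own. The node names and feature vectors are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vec = List[float]


def dot(u: Vec, v: Vec) -> float:
    return sum(a * b for a, b in zip(u, v))


def attention_weights(h: Vec, neighbor_feats: List[Vec]) -> List[float]:
    """Softmax over dot-product scores: how much a node attends to each neighbor."""
    scores = [dot(h, n) for n in neighbor_feats]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_round(features: Dict[str, Vec], edges: List[Tuple[str, str]]) -> Dict[str, Vec]:
    neighbors: Dict[str, List[str]] = {n: [] for n in features}
    for u, v in edges:  # treat relations as undirected for simplicity
        neighbors[u].append(v)
        neighbors[v].append(u)
    updated: Dict[str, Vec] = {}
    for node, h in features.items():
        if not neighbors[node]:
            updated[node] = list(h)  # isolated symbols keep their features
            continue
        feats = [features[m] for m in neighbors[node]]
        alphas = attention_weights(h, feats)
        message = [sum(a * f[i] for a, f in zip(alphas, feats)) for i in range(len(h))]
        updated[node] = [(h[i] + message[i]) / 2.0 for i in range(len(h))]
    return updated


graph = {"socrates": [1.0, 0.0], "human": [0.0, 1.0], "mortal": [0.5, 0.5]}
relations = [("socrates", "human"), ("human", "mortal")]
print(attention_round(graph, relations))
```

The symbols and relations only determine which nodes exchange messages; all of the actual "reasoning" happens in the vector updates, which is why I see GNNs as a neural model hosting symbolic structure rather than as symbolic reasoners.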

I prefer calling GNNs Type 6 Neuro-Symbolic integration rather than calling them symbolic reasoners. I believe symbolic reasoners, in general, have more reasoning capability than GNNs, so calling a GNN a symbolic reasoner is, in my opinion, a stretch.

References

- Henry Kautz. The Third AI Summer. Robert S. Engelmore Memorial Lecture, AAAI, 2020

- Lamb et al. Graph Neural Networks Meet Neural-Symbolic Computing: A Survey and Perspective. IJCAI, 2020

- Arabshahi et al. Combining Symbolic Expressions and Black-box Function Evaluations in Neural Programs. ICLR, 2018

- Hitzler et al. Logic programs and connectionist networks. Journal of Applied Logic, 2004

- Garcez et al. Neural-symbolic learning and reasoning: contributions and challenges. AAAI Spring Symposium Series, 2015

- Smolensky et al. Basic Reasoning with Tensor Product Representations. arXiv:1601.02745, 2016

- Serafini et al. Logic Tensor Networks: Deep Learning and Logical Reasoning from Data and Knowledge. arXiv:1606.04422, 2016

- Socher et al. Reasoning With Neural Tensor Networks For Knowledge Base Completion. NeurIPS, 2013

- Illanes et al. Symbolic Plans as High-Level Instructions for Reinforcement Learning. ICAPS, 2020

- Mao et al. The Neuro-Symbolic Concept Learner: Interpreting Scenes, Words, and Sentences From Natural Supervision. ICLR, 2019