harsha kokel

Relational Reinforcement Learning

My notes on Džeroski, Sašo, Luc De Raedt, and Kurt Driessens, Machine Learning 2001

The paper came before the goal-conditioned RL, Multi-task RL or Graph Neural Network literature. Major motivation of this paper is to learn a generalizable policy. Generalization in terms of varying number of objects in the domain (for example, in blocks-world number of blocks can change) or change in the goal state (for example, stack red block on blue block instead if green on yellow).

Authors demonstrate that by using approaches from inductive logic programming literature, first-order policy can be learnt which naturally supports both the generalization discussed above.

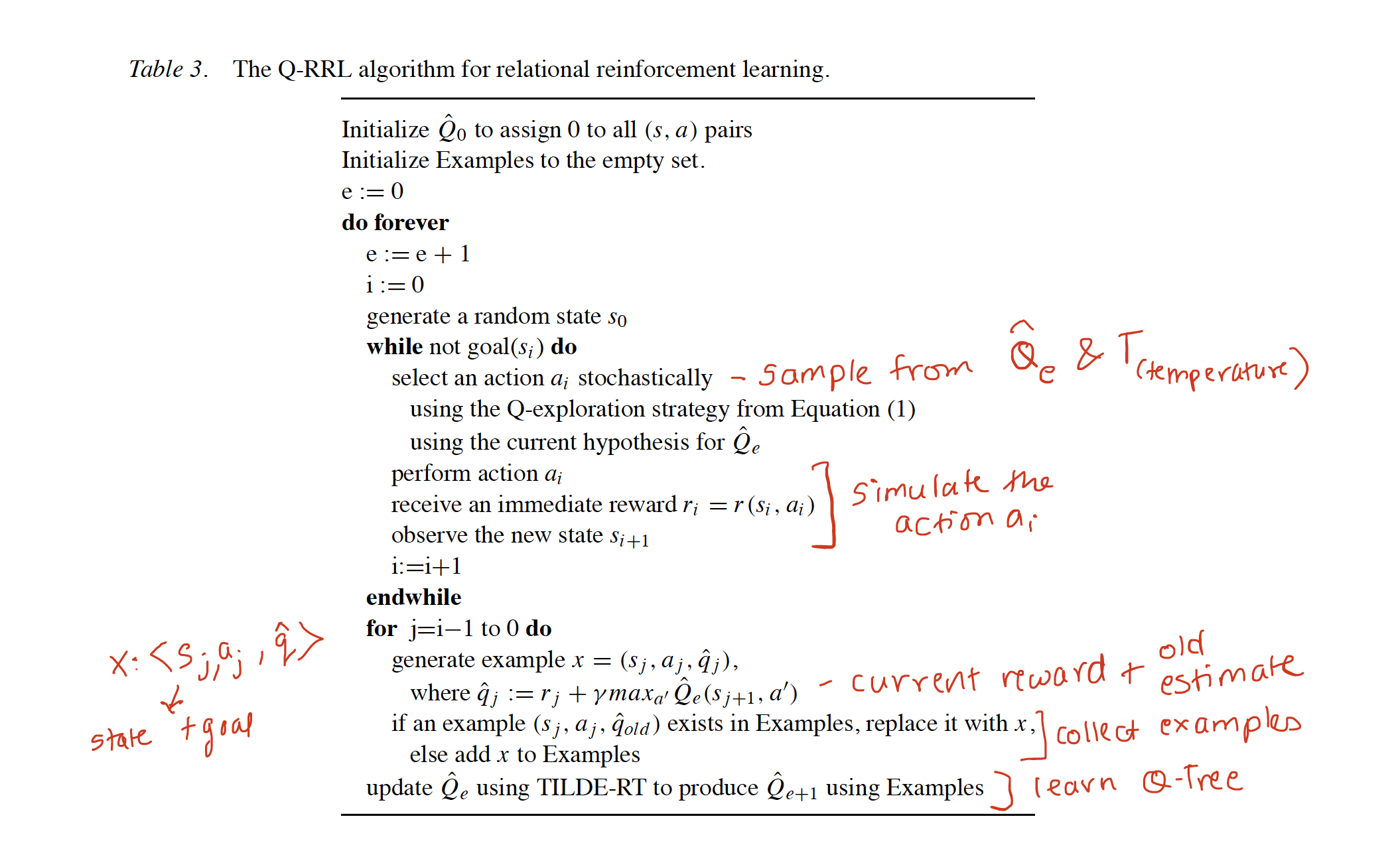

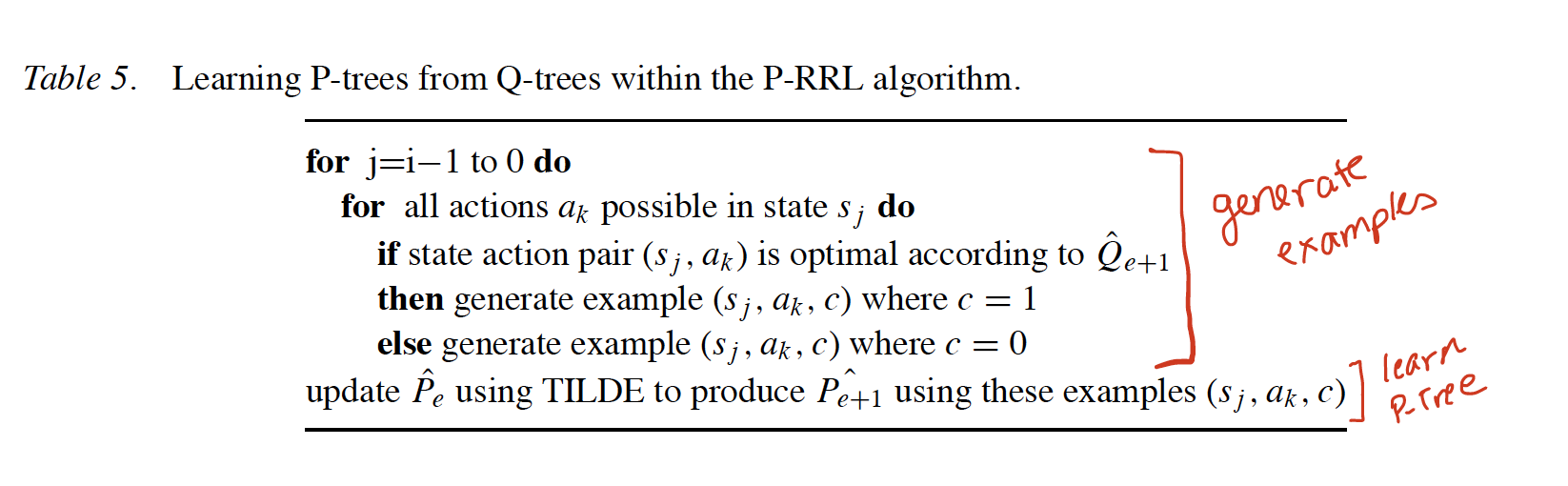

In particular, author propose to learn Q-Tree i.e. TILDE-RT (Top-down Induction of Logical decision trees for regression) as Q-Function which take the state and action pair and predict q values. The policy function, P-Tree, can then be induced from Q-Tree.

Following Q-RRL algorithm is proposed to learn Q-Tree, which updates the TILDE-RT after every episode. Data set used to learn the TILDE-RT is generated by exploring the environment and P-RRL algorithm is proposed for inducing P-Tree from Q-Tree

Critique

- Proposed solution does not seem to scale well specifically because the Logical programs do not scale with higher number of data points or high dimensional data.

- The relational trees might be able to generalize to various number of blocks but I think it will not generalize to different goals. For e.g. if all the training examples had

goal(on(.,.))and if the test examples havegoal(clear(.)), I do not think the TILDE-RT will be able to achieve that goal. - With Graph Neural Network and goal-conditioned RL, both the generalizations targeted by Q-RRL are achieved in a scalable manner. So, the only additional benefit RRL really has is the use of domain knowledge, which comes from ILP.